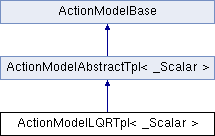

Linear-quadratic regulator (LQR) action model.

#include <lqr.hpp>

Public Types | |

| typedef ActionDataAbstractTpl< Scalar > | ActionDataAbstract |

| typedef ActionModelAbstractTpl< Scalar > | Base |

| typedef ActionDataLQRTpl< Scalar > | Data |

| typedef MathBaseTpl< Scalar > | MathBase |

| typedef MathBase::MatrixXs | MatrixXs |

| typedef StateVectorTpl< Scalar > | StateVector |

| typedef MathBase::VectorXs | VectorXs |

Public Types inherited from ActionModelAbstractTpl< _Scalar > | |

| typedef ActionDataAbstractTpl< Scalar > | ActionDataAbstract |

| typedef MathBaseTpl< Scalar > | MathBase |

| typedef ScalarSelector< Scalar >::type | ScalarType |

| typedef StateAbstractTpl< Scalar > | StateAbstract |

| typedef MathBase::VectorXs | VectorXs |

Public Member Functions | |

| ActionModelLQRTpl (const ActionModelLQRTpl &copy) | |

| Copy constructor. | |

| ActionModelLQRTpl (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N) | |

| Initialize the LQR action model. | |

| ActionModelLQRTpl (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N, const MatrixXs &G, const MatrixXs &H, const VectorXs &f, const VectorXs &q, const VectorXs &r, const VectorXs &g, const VectorXs &h) | |

| Initialize the LQR action model. | |

| ActionModelLQRTpl (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N, const VectorXs &f, const VectorXs &q, const VectorXs &r) | |

| Initialize the LQR action model. | |

| ActionModelLQRTpl (const std::size_t nx, const std::size_t nu, const bool drift_free=true) | |

| Initialize the LQR action model. | |

| virtual void | calc (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x) override |

| Compute the total cost value for nodes that depend only on the state. | |

| virtual void | calc (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x, const Eigen::Ref< const VectorXs > &u) override |

| Compute the next state and cost value. | |

| virtual void | calcDiff (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x) override |

| Compute the derivatives of the cost functions with respect to the state only. | |

| virtual void | calcDiff (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x, const Eigen::Ref< const VectorXs > &u) override |

| Compute the derivatives of the dynamics and cost functions. | |

| template<typename NewScalar > | |

| ActionModelLQRTpl< NewScalar > | cast () const |

| Cast the LQR model to a different scalar type. | |

| virtual bool | checkData (const std::shared_ptr< ActionDataAbstract > &data) override |

| Checks that a specific data belongs to this model. | |

| virtual std::shared_ptr< ActionDataAbstract > | createData () override |

| Create the action data. | |

| DEPRECATED ("Use get_A", const MatrixXs &get_Fx() const { return get_A();}) DEPRECATED("Use get_B" | |

| DEPRECATED ("Use get_f", const VectorXs &get_f0() const { return get_f();}) DEPRECATED("Use get_q" | |

| DEPRECATED ("Use get_R", const MatrixXs &get_Lxu() const { return get_R();}) DEPRECATED("Use get_N" | |

| DEPRECATED ("Use get_r", const VectorXs &get_lu() const { return get_r();}) DEPRECATED("Use get_Q" | |

| DEPRECATED ("Use set_LQR", void set_f0(const VectorXs &f) { set_LQR(A_, B_, Q_, R_, N_, G_, H_, f, q_, r_, g_, h_);}) DEPRECATED("Use set_LQR" | |

| DEPRECATED ("Use set_LQR", void set_Fx(const MatrixXs &A) { set_LQR(A, B_, Q_, R_, N_, G_, H_, f_, q_, r_, g_, h_);}) DEPRECATED("Use set_LQR" | |

| DEPRECATED ("Use set_LQR", void set_lu(const VectorXs &r) { set_LQR(A_, B_, Q_, R_, N_, G_, H_, f_, q_, r, g_, h_);}) DEPRECATED("Use set_LQR" | |

| DEPRECATED ("Use set_LQR", void set_Luu(const MatrixXs &R) { set_LQR(A_, B_, Q_, R, N_, G_, H_, f_, q_, r_, g_, h_);}) DEPRECATED("Use set_LQR" | |

| const MatrixXs & | get_A () const |

| Return the state matrix. | |

| const MatrixXs & | get_B () const |

| Return the input matrix. | |

| const VectorXs & | get_f () const |

| Return the dynamics drift. | |

| const MatrixXs & | get_Fu () const |

| const MatrixXs & | get_G () const |

| Return the state-input inequality constraint matrix. | |

| const VectorXs & | get_g () const |

| Return the state-input inequality constraint bias. | |

| const MatrixXs & | get_H () const |

| Return the state-input equality constraint matrix. | |

| const VectorXs & | get_h () const |

| Return the state-input equality constraint bias. | |

| const MatrixXs & | get_Luu () const |

| const VectorXs & | get_lx () const |

| const MatrixXs & | get_Lxx () const |

| const MatrixXs & | get_N () const |

| Return the state-input weight matrix. | |

| const MatrixXs & | get_Q () const |

| Return the state weight matrix. | |

| const VectorXs & | get_q () const |

| Return the state weight vector. | |

| const MatrixXs & | get_R () const |

| Return the input weight matrix. | |

| const VectorXs & | get_r () const |

| Return the input weight vector. | |

| virtual void | print (std::ostream &os) const override |

| Print relevant information of the LQR model. | |

| void | set_Fu (const MatrixXs &B) |

| void | set_LQR (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N, const MatrixXs &G, const MatrixXs &H, const VectorXs &f, const VectorXs &q, const VectorXs &r, const VectorXs &g, const VectorXs &h) |

| Modify the LQR action model. | |

| void | set_lx (const VectorXs &q) |

| void | set_Lxu (const MatrixXs &N) |

| void | set_Lxx (const MatrixXs &Q) |

Public Member Functions inherited from ActionModelAbstractTpl< _Scalar > | |

| ActionModelAbstractTpl (const ActionModelAbstractTpl< Scalar > &other) | |

| Copy constructor. | |

| ActionModelAbstractTpl (std::shared_ptr< StateAbstract > state, const std::size_t nu, const std::size_t nr=0, const std::size_t ng=0, const std::size_t nh=0, const std::size_t ng_T=0, const std::size_t nh_T=0) | |

| Initialize the action model. | |

| virtual const VectorXs & | get_g_lb () const |

| Return the lower bound of the inequality constraints. | |

| virtual const VectorXs & | get_g_ub () const |

| Return the upper bound of the inequality constraints. | |

| bool | get_has_control_limits () const |

| Indicates if there are defined control limits. | |

| virtual std::size_t | get_ng () const |

| Return the number of inequality constraints. | |

| virtual std::size_t | get_ng_T () const |

| Return the number of inequality terminal constraints. | |

| virtual std::size_t | get_nh () const |

| Return the number of equality constraints. | |

| virtual std::size_t | get_nh_T () const |

| Return the number of equality terminal constraints. | |

| std::size_t | get_nr () const |

| Return the dimension of the cost-residual vector. | |

| std::size_t | get_nu () const |

| Return the dimension of the control input. | |

| const std::shared_ptr< StateAbstract > & | get_state () const |

| Return the state. | |

| const VectorXs & | get_u_lb () const |

| Return the control lower bound. | |

| const VectorXs & | get_u_ub () const |

| Return the control upper bound. | |

| virtual void | quasiStatic (const std::shared_ptr< ActionDataAbstract > &data, Eigen::Ref< VectorXs > u, const Eigen::Ref< const VectorXs > &x, const std::size_t maxiter=100, const Scalar tol=Scalar(1e-9)) |

| Computes the quasi-static commands. | |

| VectorXs | quasiStatic_x (const std::shared_ptr< ActionDataAbstract > &data, const VectorXs &x, const std::size_t maxiter=100, const Scalar tol=Scalar(1e-9)) |

| void | set_g_lb (const VectorXs &g_lb) |

| Modify the lower bound of the inequality constraints. | |

| void | set_g_ub (const VectorXs &g_ub) |

| Modify the upper bound of the inequality constraints. | |

| void | set_u_lb (const VectorXs &u_lb) |

| Modify the control lower bounds. | |

| void | set_u_ub (const VectorXs &u_ub) |

| Modify the control upper bounds. | |

Static Public Member Functions | |

| static ActionModelLQRTpl | Random (const std::size_t nx, const std::size_t nu, const std::size_t ng=0, const std::size_t nh=0) |

| Create a random LQR model. | |

Public Attributes | |

| EIGEN_MAKE_ALIGNED_OPERATOR_NEW typedef _Scalar | Scalar |

Public Attributes inherited from ActionModelAbstractTpl< _Scalar > | |

| EIGEN_MAKE_ALIGNED_OPERATOR_NEW typedef _Scalar | Scalar |

Protected Attributes | |

| std::size_t | ng_ |

| Number of inequality constraints. | |

| std::size_t | nh_ |

| Number of equality constraints. | |

| std::size_t | nu_ |

| Control dimension. | |

| std::shared_ptr< StateAbstract > | state_ |

| Model of the state. | |

Protected Attributes inherited from ActionModelAbstractTpl< _Scalar > | |

| VectorXs | g_lb_ |

| Lower bound of the inequality constraints. | |

| VectorXs | g_ub_ |

| Upper bound of the inequality constraints. | |

| bool | has_control_limits_ |

| std::size_t | ng_ |

| Number of inequality constraints. | |

| std::size_t | ng_T_ |

| Number of inequality terminal constraints. | |

| std::size_t | nh_ |

| Number of equality constraints. | |

| std::size_t | nh_T_ |

| Number of equality terminal constraints. | |

| std::size_t | nr_ |

| Dimension of the cost residual. | |

| std::size_t | nu_ |

| Control dimension. | |

| std::shared_ptr< StateAbstract > | state_ |

| Model of the state. | |

| VectorXs | u_lb_ |

| Lower control limits. | |

| VectorXs | u_ub_ |

| Upper control limits. | |

| VectorXs | unone_ |

| Neutral state. | |

Additional Inherited Members | |

Protected Member Functions inherited from ActionModelAbstractTpl< _Scalar > | |

| void | update_has_control_limits () |

| Update the status of the control limits (i.e. if there are defined limits) | |

Linear-quadratic regulator (LQR) action model.

A linear-quadratic regulator (LQR) action has a transition model of the form

\[ \mathbf{x}' = \mathbf{A x + B u + f}. \]

Its cost function is quadratic of the form:

\[ \ell(\mathbf{x},\mathbf{u}) = \begin{bmatrix}1 \\ \mathbf{x} \\ \mathbf{u}\end{bmatrix}^T \begin{bmatrix}0 & \mathbf{q}^T & \mathbf{r}^T \\ \mathbf{q} & \mathbf{Q} & \mathbf{N}^T \\ \mathbf{r} & \mathbf{N} & \mathbf{R}\end{bmatrix} \begin{bmatrix}1 \\ \mathbf{x} \\ \mathbf{u}\end{bmatrix} \]

and the linear inequality and equality constraints have the form:

\[ \begin{aligned} \mathbf{g(x,u)} &= \mathbf{G}\begin{bmatrix} \mathbf{x} \\ \mathbf{u} \end{bmatrix} + \mathbf{g} \leq \mathbf{0}, \\ \mathbf{h(x,u)} &= \mathbf{H}\begin{bmatrix} \mathbf{x} \\ \mathbf{u} \end{bmatrix} + \mathbf{h} = \mathbf{0}. \end{aligned} \]
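To make the transition model and cost function above concrete, here is a minimal sketch that evaluates both exactly as they are written on this page. It is independent of the library: it uses plain `std::vector` instead of Eigen, and the names `Vector`, `Matrix`, `multiply`, `next_state` and `quadratic_cost` are hypothetical helpers introduced only for illustration. The caller is assumed to assemble the block matrix of the cost; the scaling used by the actual implementation may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // row-major storage: M[i][j]

// Return y = M * v (hypothetical helper, not part of the documented API).
Vector multiply(const Matrix& M, const Vector& v) {
  Vector y(M.size(), 0.0);
  for (std::size_t i = 0; i < M.size(); ++i) {
    assert(M[i].size() == v.size());
    for (std::size_t j = 0; j < v.size(); ++j) y[i] += M[i][j] * v[j];
  }
  return y;
}

// Transition model: x' = A x + B u + f.
Vector next_state(const Matrix& A, const Matrix& B, const Vector& f,
                  const Vector& x, const Vector& u) {
  Vector xnext = multiply(A, x);
  const Vector Bu = multiply(B, u);
  assert(Bu.size() == xnext.size() && f.size() == xnext.size());
  for (std::size_t i = 0; i < xnext.size(); ++i) xnext[i] += Bu[i] + f[i];
  return xnext;
}

// Cost as the quadratic form z^T W z with z = [1; x; u]. W is the block
// matrix [0 q^T r^T; q Q N^T; r N R], assembled by the caller (assumption).
double quadratic_cost(const Matrix& W, const Vector& x, const Vector& u) {
  Vector z{1.0};
  z.insert(z.end(), x.begin(), x.end());
  z.insert(z.end(), u.begin(), u.end());
  assert(W.size() == z.size());
  const Vector Wz = multiply(W, z);
  double cost = 0.0;
  for (std::size_t i = 0; i < z.size(); ++i) cost += z[i] * Wz[i];
  return cost;
}
```

For a scalar system, `W` reduces to the 3x3 matrix with rows `[0, q, r]`, `[q, Q, N]` and `[r, N, R]`, so the cost expands to `2qx + 2ru + Qx^2 + 2Nxu + Ru^2`.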

| typedef ActionDataAbstractTpl<Scalar> ActionDataAbstract |

| typedef ActionModelAbstractTpl<Scalar> Base |

| typedef ActionDataLQRTpl<Scalar> Data |

| typedef StateVectorTpl<Scalar> StateVector |

| typedef MathBaseTpl<Scalar> MathBase |

| typedef MathBase::VectorXs VectorXs |

| typedef MathBase::MatrixXs MatrixXs |

| ActionModelLQRTpl (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N) |

Initialize the LQR action model.

| [in] | A | State matrix |

| [in] | B | Input matrix |

| [in] | Q | State weight matrix |

| [in] | R | Input weight matrix |

| [in] | N | State-input weight matrix |

| ActionModelLQRTpl (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N, const VectorXs &f, const VectorXs &q, const VectorXs &r) |

Initialize the LQR action model.

| [in] | A | State matrix |

| [in] | B | Input matrix |

| [in] | Q | State weight matrix |

| [in] | R | Input weight matrix |

| [in] | N | State-input weight matrix |

| [in] | f | Dynamics drift |

| [in] | q | State weight vector |

| [in] | r | Input weight vector |

| ActionModelLQRTpl (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N, const MatrixXs &G, const MatrixXs &H, const VectorXs &f, const VectorXs &q, const VectorXs &r, const VectorXs &g, const VectorXs &h) |

Initialize the LQR action model.

| [in] | A | State matrix |

| [in] | B | Input matrix |

| [in] | Q | State weight matrix |

| [in] | R | Input weight matrix |

| [in] | N | State-input weight matrix |

| [in] | G | State-input inequality constraint matrix |

| [in] | H | State-input equality constraint matrix |

| [in] | f | Dynamics drift |

| [in] | q | State weight vector |

| [in] | r | Input weight vector |

| [in] | g | State-input inequality constraint bias |

| [in] | h | State-input equality constraint bias |
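The constraint parameters `G`, `g`, `H` and `h` enter through linear expressions in the stacked vector of state and control. The sketch below evaluates such a residual and checks feasibility, assuming the inequality is satisfied when its residual is non-positive and the equality when its residual is zero. It is library-independent; `constraint_residual`, `is_feasible` and the tolerance `tol` are hypothetical names chosen for this illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // row-major storage: C[i][j]

// Residual of a linear constraint: C [x; u] + c (hypothetical helper).
Vector constraint_residual(const Matrix& C, const Vector& c, const Vector& x,
                           const Vector& u) {
  Vector z(x);  // stack the state and the control into one vector
  z.insert(z.end(), u.begin(), u.end());
  assert(C.size() == c.size());
  Vector res(c);
  for (std::size_t i = 0; i < C.size(); ++i) {
    assert(C[i].size() == z.size());
    for (std::size_t j = 0; j < z.size(); ++j) res[i] += C[i][j] * z[j];
  }
  return res;
}

// True when every inequality residual is at most tol and every equality
// residual is within tol of zero (the tolerance is an assumption).
bool is_feasible(const Vector& g_res, const Vector& h_res, double tol = 1e-9) {
  for (double v : g_res)
    if (v > tol) return false;
  for (double v : h_res)
    if (std::abs(v) > tol) return false;
  return true;
}
```

Calling `constraint_residual(G, g, x, u)` and `constraint_residual(H, h, x, u)` and passing both results to `is_feasible` tells whether a given state-control pair satisfies the two constraint sets.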

| ActionModelLQRTpl (const std::size_t nx, const std::size_t nu, const bool drift_free=true) |

Initialize the LQR action model.

| [in] | nx | Dimension of state vector |

| [in] | nu | Dimension of control vector |

| [in] | drift_free | Enable / disable the bias term of the linear dynamics (default true) |

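| virtual void calc (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x, const Eigen::Ref< const VectorXs > &u) | override virtual |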

Compute the next state and cost value.

| [in] | data | Action data |

| [in] | x | State point \(\mathbf{x}\in\mathbb{R}^{ndx}\) |

| [in] | u | Control input \(\mathbf{u}\in\mathbb{R}^{nu}\) |

Implements ActionModelAbstractTpl< _Scalar >.

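| virtual void calc (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x) | override virtual |

Compute the total cost value for nodes that depend only on the state.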

It updates the total cost and the next state is not computed as it is not expected to change. This function is used in the terminal nodes of an optimal control problem.

| [in] | data | Action data |

| [in] | x | State point \(\mathbf{x}\in\mathbb{R}^{ndx}\) |

Reimplemented from ActionModelAbstractTpl< _Scalar >.

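| virtual void calcDiff (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x, const Eigen::Ref< const VectorXs > &u) | override virtual |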

Compute the derivatives of the dynamics and cost functions.

It computes the partial derivatives of the dynamical system and the cost function. It assumes that calc() has been run first. This function builds a linear-quadratic approximation of the action model (i.e. dynamical system and cost function).

| [in] | data | Action data |

| [in] | x | State point \(\mathbf{x}\in\mathbb{R}^{ndx}\) |

| [in] | u | Control input \(\mathbf{u}\in\mathbb{R}^{nu}\) |

Implements ActionModelAbstractTpl< _Scalar >.
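For a linear model, the derivatives of the dynamics with respect to the state and control are simply `A` and `B`, so the only non-trivial part of the approximation is the cost gradient. The sketch below computes that gradient for the quadratic form as written in the class description: for a symmetric block matrix `W` and `z = [1; x; u]`, the gradient of `z^T W z` is `2 W z`, and its rows split into the state and control parts. It is library-independent; `CostGradient` and `cost_gradient` are hypothetical names, and the scaling used by the actual implementation may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // row-major storage: W[i][j]

// Gradient of the cost with respect to the state (lx) and control (lu).
struct CostGradient {
  Vector lx;
  Vector lu;
};

// Gradient of z^T W z for symmetric W and z = [1; x; u], which is 2 W z.
// Row 0 belongs to the constant entry and is skipped; the next nx rows give
// the state gradient and the remaining nu rows the control gradient.
CostGradient cost_gradient(const Matrix& W, const Vector& x, const Vector& u) {
  Vector z{1.0};
  z.insert(z.end(), x.begin(), x.end());
  z.insert(z.end(), u.begin(), u.end());
  assert(W.size() == z.size());
  CostGradient grad{Vector(x.size(), 0.0), Vector(u.size(), 0.0)};
  for (std::size_t i = 1; i < z.size(); ++i) {
    assert(W[i].size() == z.size());
    double row = 0.0;
    for (std::size_t j = 0; j < z.size(); ++j) row += W[i][j] * z[j];
    if (i <= x.size()) {
      grad.lx[i - 1] = 2.0 * row;
    } else {
      grad.lu[i - 1 - x.size()] = 2.0 * row;
    }
  }
  return grad;
}
```

In the scalar case this reduces to `lx = 2(q + Qx + Nu)` and `lu = 2(r + Nx + Ru)`, which can be checked by hand or against a finite-difference estimate.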

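| virtual void calcDiff (const std::shared_ptr< ActionDataAbstract > &data, const Eigen::Ref< const VectorXs > &x) | override virtual |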

Compute the derivatives of the cost functions with respect to the state only.

It updates the derivatives of the cost function with respect to the state only. This function is used in the terminal nodes of an optimal control problem.

| [in] | data | Action data |

| [in] | x | State point \(\mathbf{x}\in\mathbb{R}^{ndx}\) |

Reimplemented from ActionModelAbstractTpl< _Scalar >.

| virtual std::shared_ptr< ActionDataAbstract > createData () | override virtual |

Create the action data.

Reimplemented from ActionModelAbstractTpl< _Scalar >.

| template<typename NewScalar > ActionModelLQRTpl< NewScalar > cast () const |

Cast the LQR model to a different scalar type.

It is useful for operations requiring different precision or scalar types.

| NewScalar | The new scalar type to cast to. |
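The general pattern behind a scalar cast can be sketched with a small stand-in type. `TinyLqr` below is a hypothetical struct invented for this illustration, not the documented class; it only shows how a member template can rebuild an object with each coefficient converted to `NewScalar`.

```cpp
#include <vector>

// Hypothetical scalar-templated model holding two coefficient lists.
template <typename Scalar>
struct TinyLqr {
  std::vector<Scalar> A;  // flattened state coefficients
  std::vector<Scalar> B;  // flattened input coefficients

  // Return a copy whose coefficients are converted to NewScalar.
  template <typename NewScalar>
  TinyLqr<NewScalar> cast() const {
    TinyLqr<NewScalar> out;
    out.A.reserve(A.size());
    out.B.reserve(B.size());
    for (const Scalar& a : A) out.A.push_back(static_cast<NewScalar>(a));
    for (const Scalar& b : B) out.B.push_back(static_cast<NewScalar>(b));
    return out;
  }
};
```

With this stand-in, `model.cast<float>()` on a `TinyLqr<double>` yields a `TinyLqr<float>`, mirroring the `cast<NewScalar>()` signature documented above.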

| virtual bool checkData (const std::shared_ptr< ActionDataAbstract > &data) | override virtual |

Checks that a specific data belongs to this model.

Reimplemented from ActionModelAbstractTpl< _Scalar >.

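| static ActionModelLQRTpl Random (const std::size_t nx, const std::size_t nu, const std::size_t ng=0, const std::size_t nh=0) | static |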

Create a random LQR model.

| [in] | nx | State dimension |

| [in] | nu | Control dimension |

| [in] | ng | Inequality constraint dimension (default 0) |

| [in] | nh | Equality constraint dimension (default 0) |

| void set_LQR (const MatrixXs &A, const MatrixXs &B, const MatrixXs &Q, const MatrixXs &R, const MatrixXs &N, const MatrixXs &G, const MatrixXs &H, const VectorXs &f, const VectorXs &q, const VectorXs &r, const VectorXs &g, const VectorXs &h) |

Modify the LQR action model.

| [in] | A | State matrix |

| [in] | B | Input matrix |

| [in] | Q | State weight matrix |

| [in] | R | Input weight matrix |

| [in] | N | State-input weight matrix |

| [in] | G | State-input inequality constraint matrix |

| [in] | H | State-input equality constraint matrix |

| [in] | f | Dynamics drift |

| [in] | q | State weight vector |

| [in] | r | Input weight vector |

| [in] | g | State-input inequality constraint bias |

| [in] | h | State-input equality constraint bias |


| virtual void print (std::ostream &os) const | override virtual |

Print relevant information of the LQR model.

| [out] | os | Output stream object |

Reimplemented from ActionModelAbstractTpl< _Scalar >.

| EIGEN_MAKE_ALIGNED_OPERATOR_NEW typedef _Scalar Scalar |

| std::size_t ng_ | protected |

Number of inequality constraints.

Definition at line 330 of file action-base.hpp.

| std::size_t nh_ | protected |

Number of equality constraints.

Definition at line 331 of file action-base.hpp.

| std::size_t nu_ | protected |

Control dimension.

Definition at line 328 of file action-base.hpp.

| std::shared_ptr< StateAbstract > state_ | protected |

Model of the state.

Definition at line 334 of file action-base.hpp.