Abstract class for optimal control solvers.

#include <solver-base.hpp>

Public Member Functions | |

| EIGEN_MAKE_ALIGNED_OPERATOR_NEW | SolverAbstract (std::shared_ptr< ShootingProblem > problem) |

| Initialize the solver. | |

| virtual void | computeDirection (const bool recalc)=0 |

| Compute the search direction \((\delta\mathbf{x}^k,\delta\mathbf{u}^k)\) for the current guess \((\mathbf{x}^k_s,\mathbf{u}^k_s)\). | |

| double | computeDynamicFeasibility () |

| Compute the dynamic feasibility \(\|\mathbf{f}_{\mathbf{s}}\|_{\infty,1}\) for the current guess \((\mathbf{x}^k,\mathbf{u}^k)\). | |

| double | computeEqualityFeasibility () |

| Compute the feasibility of the equality constraints for the current guess. | |

| double | computeInequalityFeasibility () |

| Compute the feasibility of the inequality constraints for the current guess. | |

| DEPRECATED ("Use get_preg for primal-variable regularization", double get_xreg() const ;) DEPRECATED("Use get_preg for primal-variable regularization" | |

| DEPRECATED ("Use set_preg for primal-variable regularization", void set_xreg(const double xreg);) DEPRECATED("Use set_preg for primal-variable regularization" | |

| virtual const Eigen::Vector2d & | expectedImprovement ()=0 |

| Return the expected improvement \(dV_{exp}\) from a given current search direction \((\delta\mathbf{x}^k,\delta\mathbf{u}^k)\). | |

| double | get_cost () const |

| Return the cost for the current guess. | |

| const Eigen::Vector2d & | get_d () const |

| Return the linear and quadratic terms of the expected improvement. | |

| double | get_dfeas () const |

| Return the reduction in the feasibility. | |

| double | get_dPhi () const |

| Return the reduction in the merit function \(\Delta\Phi\). | |

| double | get_dPhiexp () const |

| Return the expected reduction in the merit function \(\Delta\Phi_{exp}\). | |

| double | get_dreg () const |

| Return the dual-variable regularization. | |

| double | get_dV () const |

| Return the reduction in the cost function \(\Delta V\). | |

| double | get_dVexp () const |

| Return the expected reduction in the cost function \(\Delta V_{exp}\). | |

| double | get_feas () const |

| Return the total feasibility for the current guess. | |

| FeasibilityNorm | get_feasnorm () const |

| Return the type of norm used to evaluate the dynamic and constraints feasibility. | |

| double | get_ffeas () const |

| Return the dynamic feasibility for the current guess. | |

| double | get_ffeas_try () const |

| Return the dynamic feasibility for the current step length. | |

| const std::vector< Eigen::VectorXd > & | get_fs () const |

| Return the dynamic infeasibility \(\mathbf{f}_{s}\). | |

| double | get_gfeas () const |

| Return the inequality feasibility for the current guess. | |

| double | get_gfeas_try () const |

| Return the inequality feasibility for the current step length. | |

| double | get_hfeas () const |

| Return the equality feasibility for the current guess. | |

| double | get_hfeas_try () const |

| Return the equality feasibility for the current step length. | |

| bool | get_is_feasible () const |

| Return the feasibility status of the \((\mathbf{x}_s,\mathbf{u}_s)\) trajectory. | |

| std::size_t | get_iter () const |

| Return the number of iterations performed by the solver. | |

| double | get_merit () const |

| Return the merit for the current guess. | |

| double | get_preg () const |

| Return the primal-variable regularization. | |

| const std::shared_ptr< ShootingProblem > & | get_problem () const |

| Return the shooting problem. | |

| double | get_steplength () const |

| Return the step length \(\alpha\). | |

| double | get_stop () const |

| Return the stopping-criteria value computed by stoppingCriteria(). | |

| double | get_th_acceptstep () const |

| Return the threshold used for accepting a step. | |

| double | get_th_gaptol () const |

| Return the threshold for accepting a gap as non-zero. | |

| double | get_th_stop () const |

| Return the tolerance for stopping the algorithm. | |

| double | get_ureg () const |

| const std::vector< Eigen::VectorXd > & | get_us () const |

| Return the control trajectory \(\mathbf{u}_s\). | |

| const std::vector< Eigen::VectorXd > & | get_xs () const |

| Return the state trajectory \(\mathbf{x}_s\). | |

| const std::vector< std::shared_ptr< CallbackAbstract > > & | getCallbacks () const |

| Return the list of callback functions used for diagnostics. | |

| virtual void | resizeData () |

| Resize the solver data. | |

| void | set_dreg (const double dreg) |

| Modify the dual-variable regularization value. | |

| void | set_feasnorm (const FeasibilityNorm feas_norm) |

| Modify the current norm used for computing the dynamic and constraint feasibility. | |

| void | set_preg (const double preg) |

| Modify the primal-variable regularization value. | |

| void | set_th_acceptstep (const double th_acceptstep) |

| Modify the threshold used for accepting a step. | |

| void | set_th_gaptol (const double th_gaptol) |

| Modify the threshold for accepting a gap as non-zero. | |

| void | set_th_stop (const double th_stop) |

| Modify the tolerance for stopping the algorithm. | |

| void | set_ureg (const double ureg) |

| void | set_us (const std::vector< Eigen::VectorXd > &us) |

| Modify the control trajectory \(\mathbf{u}_s\). | |

| void | set_xs (const std::vector< Eigen::VectorXd > &xs) |

| Modify the state trajectory \(\mathbf{x}_s\). | |

| void | setCallbacks (const std::vector< std::shared_ptr< CallbackAbstract > > &callbacks) |

| Set a list of callback functions used for solver diagnostics. | |

| void | setCandidate (const std::vector< Eigen::VectorXd > &xs_warm=DEFAULT_VECTOR, const std::vector< Eigen::VectorXd > &us_warm=DEFAULT_VECTOR, const bool is_feasible=false) |

| Set the solver candidate trajectories \((\mathbf{x}_s,\mathbf{u}_s)\). | |

| virtual bool | solve (const std::vector< Eigen::VectorXd > &init_xs=DEFAULT_VECTOR, const std::vector< Eigen::VectorXd > &init_us=DEFAULT_VECTOR, const std::size_t maxiter=100, const bool is_feasible=false, const double reg_init=NAN)=0 |

| Compute the optimal trajectory \(\mathbf{x}^*_s,\mathbf{u}^*_s\) as lists of \(T+1\) and \(T\) terms. | |

| virtual double | stoppingCriteria ()=0 |

| Return a positive value that quantifies the algorithm termination. | |

| virtual double | tryStep (const double steplength=1)=0 |

| Try a predefined step length \(\alpha\) and compute its cost improvement \(dV\). | |

Protected Member Functions | |

| DEPRECATED ("Use preg_ for primal-variable regularization", double xreg_;) DEPRECATED("Use dreg_ for primal-variable regularization" | |

Protected Attributes | |

| std::vector< std::shared_ptr< CallbackAbstract > > | callbacks_ |

| Callback functions. | |

| double | cost_ |

| Cost for the current guess. | |

| Eigen::Vector2d | d_ |

| LQ approximation of the expected improvement. | |

| double | dfeas_ |

| Reduction in the feasibility. | |

| double | dPhi_ |

| Reduction in the merit function computed by tryStep(). | |

| double | dPhiexp_ |

| Expected reduction in the merit function. | |

| double | dreg_ |

| Current dual-variable regularization value. | |

| double | dV_ |

| Reduction in the cost function computed by tryStep(). | |

| double | dVexp_ |

| Expected reduction in the cost function. | |

| double | feas_ |

| Total feasibility for the current guess. | |

| enum FeasibilityNorm | feasnorm_ |

| double | ffeas_ |

| Feasibility of the dynamic constraints for the current guess. | |

| double | ffeas_try_ |

| std::vector< Eigen::VectorXd > | fs_ |

| Gaps/defects between shooting nodes. | |

| std::vector< Eigen::VectorXd > | g_adj_ |

| Adjusted inequality bound. | |

| double | gfeas_ |

| double | gfeas_try_ |

| double | hfeas_ |

| double | hfeas_try_ |

| bool | is_feasible_ |

| Flag that indicates whether the iteration is feasible. | |

| std::size_t | iter_ |

| Number of iterations performed by the solver. | |

| double | merit_ |

| Merit for the current guess. | |

| double | preg_ |

| Current primal-variable regularization value. | |

| std::shared_ptr< ShootingProblem > | problem_ |

| Optimal control problem. | |

| double | steplength_ |

| Current applied step length. | |

| double | stop_ |

| Value computed by stoppingCriteria(). | |

| double | th_acceptstep_ |

| Threshold used for accepting a step. | |

| double | th_gaptol_ |

| Threshold limit to check non-zero gaps. | |

| double | th_stop_ |

| Tolerance for stopping the algorithm. | |

| double | tmp_feas_ |

| Temporary variable used for computing the feasibility. | |

| double | ureg_ |

| std::vector< Eigen::VectorXd > | us_ |

| Control trajectory. | |

| bool | was_feasible_ |

| std::vector< Eigen::VectorXd > | xs_ |

| State trajectory. | |

Abstract class for optimal control solvers.

A solver resolves an optimal control problem of the form

\begin{eqnarray*} \begin{Bmatrix} \mathbf{x}^*_0,\cdots,\mathbf{x}^*_{T} \\ \mathbf{u}^*_0,\cdots,\mathbf{u}^*_{T-1} \end{Bmatrix} = \arg\min_{\mathbf{x}_s,\mathbf{u}_s} && l_T (\mathbf{x}_T) + \sum_{k=0}^{T-1} l_k(\mathbf{x}_k,\mathbf{u}_k) \\ \operatorname{subject}\,\operatorname{to} && \mathbf{x}_0 = \mathbf{\tilde{x}}_0\\ && \mathbf{x}_{k+1} = \mathbf{f}_k(\mathbf{x}_k,\mathbf{u}_k)\\ && \mathbf{x}_k\in\mathcal{X}, \mathbf{u}_k\in\mathcal{U} \end{eqnarray*}

where \(l_T(\mathbf{x}_T)\) and \(l_k(\mathbf{x}_k,\mathbf{u}_k)\) are the terminal and running cost functions, respectively, \(\mathbf{f}_k(\mathbf{x}_k,\mathbf{u}_k)\) describes the evolution of the system, and the admissible state and control sets are defined by \(\mathbf{x}_k\in\mathcal{X}\), \(\mathbf{u}_k\in\mathcal{U}\). An action model, defined in the shooting problem, describes each node \(k\). Inside the action model, we specialize the cost functions, the system evolution, and the admissible sets.
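To make the objective concrete, the following is a minimal sketch that rolls out a control sequence and evaluates \(l_T(\mathbf{x}_T) + \sum_{k=0}^{T-1} l_k(\mathbf{x}_k,\mathbf{u}_k)\). It is not part of the library: `ToyProblem`, `rollout` and `totalCost` are hypothetical names, and the state and control are scalars for brevity, whereas a real action model works on vectors.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical scalar stand-ins for the dynamics f_k, the running cost l_k
// and the terminal cost l_T; these are illustration-only names.
struct ToyProblem {
  double x0;                                           // initial state \tilde{x}_0
  std::function<double(double, double)> dynamics;      // f_k(x_k, u_k)
  std::function<double(double, double)> running_cost;  // l_k(x_k, u_k)
  std::function<double(double)> terminal_cost;         // l_T(x_T)
};

// Roll out the controls us (T elements) from x0 and return the T+1 states.
std::vector<double> rollout(const ToyProblem& p, const std::vector<double>& us) {
  std::vector<double> xs;
  xs.reserve(us.size() + 1);
  xs.push_back(p.x0);
  for (double u : us) xs.push_back(p.dynamics(xs.back(), u));
  return xs;
}

// Evaluate l_T(x_T) + sum_{k=0}^{T-1} l_k(x_k, u_k).
// Precondition: xs.size() == us.size() + 1.
double totalCost(const ToyProblem& p, const std::vector<double>& xs,
                 const std::vector<double>& us) {
  double cost = 0.0;
  for (std::size_t k = 0; k < us.size(); ++k) {
    cost += p.running_cost(xs[k], us[k]);
  }
  return cost + p.terminal_cost(xs.back());
}
```

Note the sizes: a horizon of \(T\) controls always yields \(T+1\) states, which is the same convention used for the state and control trajectories throughout this class.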

The main routines are computeDirection() and tryStep(). The former finds a search direction and typically computes the derivatives of each action model. The latter rolls out the dynamics and cost (i.e., the action) to try the search direction found by computeDirection(). Both functions use the current guess defined by setCandidate(). Finally, solve() defines when the search direction and step length are computed in each iteration. It also describes the globalization strategy (i.e., regularization) of the numerical optimization.

See also: solve(), computeDirection(), tryStep(), stoppingCriteria()

Definition at line 61 of file solver-base.hpp.
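The interplay between these routines can be sketched on a toy problem. The example below is an illustration under stated assumptions, not the implementation of any concrete solver: `ToySolver` is a hypothetical, self-contained struct that minimizes \((x-3)^2\) with steepest descent, and the acceptance test (actual reduction larger than a fraction of the expected one) is only one possible globalization strategy.

```cpp
#include <cstddef>

// Hypothetical 1-D stand-in for a solver: minimizes V(x) = (x - 3)^2.
// Member names mirror the routines described above, but this struct does
// not derive from SolverAbstract.
struct ToySolver {
  double x = 0.0;              // current guess
  double dx = 0.0;             // search direction
  double x_try = 0.0;          // candidate produced by tryStep()
  double th_stop = 1e-9;       // tolerance for stopping
  double th_acceptstep = 0.1;  // fraction of the expected reduction required
  std::size_t iter = 0;        // iterations performed

  static double cost(double x) { return (x - 3.0) * (x - 3.0); }
  static double gradient(double x) { return 2.0 * (x - 3.0); }

  // Steepest-descent direction at the current guess.
  void computeDirection() { dx = -gradient(x); }

  // Squared gradient norm: small values indicate a local minimum.
  double stoppingCriteria() const { return gradient(x) * gradient(x); }

  // Expected reduction predicted by the linear model along dx.
  double expectedImprovement(double alpha) const {
    return -alpha * gradient(x) * dx;
  }

  // Try x + alpha * dx and return the actual cost reduction dV.
  double tryStep(double alpha) {
    x_try = x + alpha * dx;
    return cost(x) - cost(x_try);
  }

  bool solve(std::size_t maxiter = 100) {
    for (iter = 0; iter < maxiter; ++iter) {
      computeDirection();
      if (stoppingCriteria() < th_stop) return true;
      // Backtracking: halve alpha until the step is accepted.
      for (double alpha = 1.0; alpha > 1e-8; alpha *= 0.5) {
        const double dV = tryStep(alpha);
        if (dV > th_acceptstep * expectedImprovement(alpha)) {
          x = x_try;
          break;
        }
      }
    }
    return false;
  }
};
```

In this toy case the full step overshoots and yields no reduction, so it is rejected; the half step lands on the minimizer, and the next call to stoppingCriteria() terminates the loop.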

| SolverAbstract | ( | std::shared_ptr< ShootingProblem > | problem | ) | explicit |

Initialize the solver.

| [in] | problem | shooting problem |

Definition at line 19 of file solver-base.cpp.

|

virtual |

Definition at line 71 of file solver-base.cpp.

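| virtual bool solve (const std::vector< Eigen::VectorXd > &init_xs=DEFAULT_VECTOR, const std::vector< Eigen::VectorXd > &init_us=DEFAULT_VECTOR, const std::size_t maxiter=100, const bool is_feasible=false, const double reg_init=NAN) | pure virtual |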

Compute the optimal trajectory \(\mathbf{x}^*_s,\mathbf{u}^*_s\) as lists of \(T+1\) and \(T\) terms.

From an initial guess init_xs, init_us (feasible or not), iterate over computeDirection() and tryStep() until stoppingCriteria() is below threshold. It also describes the globalization strategy used during the numerical optimization.

| [in] | init_xs | initial guess for state trajectory with \(T+1\) elements (default []) |

| [in] | init_us | initial guess for control trajectory with \(T\) elements (default []) |

| [in] | maxiter | maximum allowed number of iterations (default 100) |

| [in] | is_feasible | true if the init_xs are obtained from integrating the init_us (rollout) (default false) |

| [in] | reg_init | initial guess for the regularization value. Very low values are typically used with very good initial guesses (default 1e-9). |

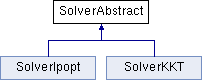

Implemented in SolverIpopt, and SolverKKT.

| virtual void computeDirection | ( | const bool | recalc | ) | pure virtual |

Compute the search direction \((\delta\mathbf{x}^k,\delta\mathbf{u}^k)\) for the current guess \((\mathbf{x}^k_s,\mathbf{u}^k_s)\).

You must call setCandidate() first in order to define the current guess. A current guess defines a state and control trajectory \((\mathbf{x}^k_s,\mathbf{u}^k_s)\) of \(T+1\) and \(T\) elements, respectively.

| [in] | recalc | true for recalculating the derivatives at current state and control |

Implemented in SolverKKT.

| virtual double tryStep | ( | const double | steplength = 1 | ) | pure virtual |

Try a predefined step length \(\alpha\) and compute its cost improvement \(dV\).

It uses the search direction found by computeDirection() to try a determined step length \(\alpha\). Therefore, it assumes that we have run computeDirection() first. Additionally, it returns the cost improvement \(dV\) along the predefined step length \(\alpha\).

| [in] | steplength | applied step length ( \(0\leq\alpha\leq1\)) |

Implemented in SolverKKT.

| virtual double stoppingCriteria | ( | ) | pure virtual |

Return a positive value that quantifies the algorithm termination.

This value typically represents the gradient norm, which tells us whether a local minimum has been reached. Strictly speaking, the stopping criterion depends on the search direction (calculated by computeDirection()), but it could also depend on the chosen step length, tested by tryStep().

Implemented in SolverKKT.

| virtual const Eigen::Vector2d & expectedImprovement | ( | ) | pure virtual |

Return the expected improvement \(dV_{exp}\) from a given current search direction \((\delta\mathbf{x}^k,\delta\mathbf{u}^k)\).

For computing the expected improvement, you need to compute the search direction first via computeDirection().

Implemented in SolverKKT.
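As an illustration of how a two-element expected improvement might be used, the sketch below combines a linear and a quadratic term into an expected reduction for a given step length \(\alpha\). The formula \(\alpha\,(d_0 + \tfrac{1}{2}\alpha\,d_1)\) is an assumption about a quadratic model along the search direction, and `expectedReduction` is a hypothetical helper; a concrete solver may combine the terms differently.

```cpp
#include <array>

// Assumption: d[0] is the linear term and d[1] the quadratic term of a
// quadratic model of the cost change along the search direction.
// Returns alpha * (d[0] + 0.5 * alpha * d[1]).
double expectedReduction(const std::array<double, 2>& d, double alpha) {
  return alpha * (d[0] + 0.5 * alpha * d[1]);
}
```

Under this model, a line search can compare the actual reduction returned by tryStep() against this value to decide whether a step length is acceptable.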

| virtual void resizeData | ( | ) | virtual |

Resize the solver data.

If the shooting problem has changed after construction, then this function resizes all the data before starting to solve the problem.

Reimplemented in SolverIpopt.

Definition at line 73 of file solver-base.cpp.

| double computeDynamicFeasibility | ( | ) |

Compute the dynamic feasibility \(\|\mathbf{f}_{\mathbf{s}}\|_{\infty,1}\) for the current guess \((\mathbf{x}^k,\mathbf{u}^k)\).

The feasibility can be computed using different norms (e.g., \(\ell_\infty\) or \(\ell_1\) norms). By default we use the \(\ell_\infty\) norm; however, the type of norm can be changed using set_feasnorm(). Note that \(\mathbf{f}_{\mathbf{s}}\) are the gaps on the dynamics, which are computed at each node as \(\mathbf{x}^{'}-\mathbf{f}(\mathbf{x},\mathbf{u})\).

Definition at line 92 of file solver-base.cpp.
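The two norms can be illustrated with a small, dependency-free sketch. It assumes that the \(\ell_\infty\) variant takes the largest absolute gap entry over all nodes and that the \(\ell_1\) variant sums the absolute entries over all nodes; `Norm` and `gapFeasibility` are hypothetical names, and plain `std::vector<double>` replaces `Eigen::VectorXd` to keep the example self-contained.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical stand-in for the FeasibilityNorm type.
enum class Norm { LInf, L1 };

// Aggregate the per-node gaps f_s into a single feasibility value.
// LInf: largest absolute entry over all nodes.
// L1:   sum of absolute entries over all nodes.
double gapFeasibility(const std::vector<std::vector<double>>& fs, Norm norm) {
  double feas = 0.0;
  for (const auto& gap : fs) {
    for (double g : gap) {
      if (norm == Norm::LInf) {
        feas = std::max(feas, std::abs(g));
      } else {
        feas += std::abs(g);
      }
    }
  }
  return feas;
}
```

The \(\ell_1\) value grows with the horizon length and the state dimension, while the \(\ell_\infty\) value only reports the worst gap, which is worth keeping in mind when choosing thresholds.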

| double computeInequalityFeasibility | ( | ) |

Compute the feasibility of the inequality constraints for the current guess.

The feasibility can be computed using different norms (e.g., \(\ell_\infty\) or \(\ell_1\) norms). By default we use the \(\ell_\infty\) norm; however, the type of norm can be changed using set_feasnorm().

Definition at line 127 of file solver-base.cpp.

| double computeEqualityFeasibility | ( | ) |

Compute the feasibility of the equality constraints for the current guess.

The feasibility can be computed using different norms (e.g., \(\ell_\infty\) or \(\ell_1\) norms). By default we use the \(\ell_\infty\) norm; however, the type of norm can be changed using set_feasnorm().

Definition at line 178 of file solver-base.cpp.

| void setCandidate | ( | const std::vector< Eigen::VectorXd > & | xs_warm = DEFAULT_VECTOR, |
| | | const std::vector< Eigen::VectorXd > & | us_warm = DEFAULT_VECTOR, |
| | | const bool | is_feasible = false |
| | ) | | |

Set the solver candidate trajectories \((\mathbf{x}_s,\mathbf{u}_s)\).

The solver candidates are defined as state and control trajectories \((\mathbf{x}_s,\mathbf{u}_s)\) of \(T+1\) and \(T\) elements, respectively. Additionally, we need to define the dynamic feasibility of the \((\mathbf{x}_s,\mathbf{u}_s)\) pair. Note that the trajectories are feasible if \(\mathbf{x}_s\) is the resulting trajectory from the system rollout with \(\mathbf{u}_s\) inputs.

| [in] | xs_warm | state trajectory of \(T+1\) elements (default []) |

| [in] | us_warm | control trajectory of \(T\) elements (default []) |

| [in] | is_feasible | true if the xs_warm are obtained from integrating the us_warm (rollout) (default false) |

Definition at line 213 of file solver-base.cpp.
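Whether a candidate pair is dynamically feasible can be checked by computing the gaps between consecutive nodes. The sketch below does this for a scalar system; `computeGaps` and `isRolloutFeasible` are hypothetical helpers, and comparing each gap against a tolerance is an assumption modeled on the gap threshold described for this class.

```cpp
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Gaps f_k = x_{k+1} - f(x_k, u_k) for a scalar system.
// Precondition: xs.size() == us.size() + 1.
std::vector<double> computeGaps(const std::function<double(double, double)>& f,
                                const std::vector<double>& xs,
                                const std::vector<double>& us) {
  std::vector<double> fs(us.size());
  for (std::size_t k = 0; k < us.size(); ++k) {
    fs[k] = xs[k + 1] - f(xs[k], us[k]);
  }
  return fs;
}

// The pair (xs, us) is treated as feasible when every gap is below the
// tolerance th_gaptol in absolute value.
bool isRolloutFeasible(const std::vector<double>& fs, double th_gaptol) {
  for (double g : fs) {
    if (std::abs(g) >= th_gaptol) return false;
  }
  return true;
}
```

A state trajectory produced by rolling out the controls has zero gaps by construction, so it would be reported as feasible; an arbitrary state guess generally would not.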

| void setCallbacks | ( | const std::vector< std::shared_ptr< CallbackAbstract > > & | callbacks | ) |

Set a list of callback functions used for solver diagnostics.

At each iteration, the solver calls this set of functions to let the user diagnose its performance.

| callbacks | set of callback functions |

Definition at line 285 of file solver-base.cpp.
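The callback mechanism follows a common observer pattern, which can be sketched without the library. Everything below is hypothetical: `ToySolverState`, `ToyCallback`, `CostRecorder` and `runIterations` only mimic the idea of a list of shared callback objects invoked once per iteration, and the actual CallbackAbstract interface may differ.

```cpp
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical snapshot of what a solver exposes to its callbacks.
struct ToySolverState {
  std::size_t iter = 0;
  double cost = 0.0;
};

// Hypothetical stand-in for an abstract callback.
struct ToyCallback {
  virtual ~ToyCallback() = default;
  virtual void operator()(const ToySolverState& state) = 0;
};

// Records the cost seen at each iteration.
struct CostRecorder : ToyCallback {
  std::vector<double> costs;
  void operator()(const ToySolverState& state) override {
    costs.push_back(state.cost);
  }
};

// Run niter dummy iterations and notify every callback after each one.
void runIterations(std::size_t niter,
                   const std::vector<std::shared_ptr<ToyCallback>>& callbacks) {
  ToySolverState state;
  state.cost = 8.0;
  for (state.iter = 0; state.iter < niter; ++state.iter) {
    state.cost *= 0.5;  // pretend the solver halved the cost
    for (const auto& cb : callbacks) (*cb)(state);
  }
}
```

Holding the callbacks through shared pointers lets the caller keep its own handle to, for example, a recorder and inspect the collected data after the solve finishes.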

| const std::vector< std::shared_ptr< CallbackAbstract > > & getCallbacks | ( | ) | const |

Return the list of callback functions used for diagnostics.

Definition at line 291 of file solver-base.cpp.

| const std::shared_ptr< ShootingProblem > & get_problem | ( | ) | const |

Return the shooting problem.

Definition at line 295 of file solver-base.cpp.

| const std::vector< Eigen::VectorXd > & get_xs | ( | ) | const |

Return the state trajectory \(\mathbf{x}_s\).

Definition at line 299 of file solver-base.cpp.

| const std::vector< Eigen::VectorXd > & get_us | ( | ) | const |

Return the control trajectory \(\mathbf{u}_s\).

Definition at line 303 of file solver-base.cpp.

| const std::vector< Eigen::VectorXd > & get_fs | ( | ) | const |

Return the dynamic infeasibility \(\mathbf{f}_{s}\).

Definition at line 307 of file solver-base.cpp.

| bool get_is_feasible | ( | ) | const |

Return the feasibility status of the \((\mathbf{x}_s,\mathbf{u}_s)\) trajectory.

Definition at line 311 of file solver-base.cpp.

| double get_cost | ( | ) | const |

Return the cost for the current guess.

Definition at line 313 of file solver-base.cpp.

| double get_merit | ( | ) | const |

Return the merit for the current guess.

Definition at line 315 of file solver-base.cpp.

| double get_stop | ( | ) | const |

Return the stopping-criteria value computed by stoppingCriteria()

Definition at line 317 of file solver-base.cpp.

| const Eigen::Vector2d & get_d | ( | ) | const |

Return the linear and quadratic terms of the expected improvement.

Definition at line 319 of file solver-base.cpp.

| double get_dV | ( | ) | const |

Return the reduction in the cost function \(\Delta V\).

Definition at line 321 of file solver-base.cpp.

| double get_dPhi | ( | ) | const |

Return the reduction in the merit function \(\Delta\Phi\).

Definition at line 323 of file solver-base.cpp.

| double get_dVexp | ( | ) | const |

Return the expected reduction in the cost function \(\Delta V_{exp}\).

Definition at line 325 of file solver-base.cpp.

| double get_dPhiexp | ( | ) | const |

Return the expected reduction in the merit function \(\Delta\Phi_{exp}\).

Definition at line 327 of file solver-base.cpp.

| double get_dfeas | ( | ) | const |

Return the reduction in the feasibility.

Definition at line 329 of file solver-base.cpp.

| double get_feas | ( | ) | const |

Return the total feasibility for the current guess.

Definition at line 331 of file solver-base.cpp.

| double get_ffeas | ( | ) | const |

Return the dynamic feasibility for the current guess.

Definition at line 333 of file solver-base.cpp.

| double get_gfeas | ( | ) | const |

Return the inequality feasibility for the current guess.

Definition at line 335 of file solver-base.cpp.

| double get_hfeas | ( | ) | const |

Return the equality feasibility for the current guess.

Definition at line 337 of file solver-base.cpp.

| double get_ffeas_try | ( | ) | const |

Return the dynamic feasibility for the current step length.

Definition at line 339 of file solver-base.cpp.

| double get_gfeas_try | ( | ) | const |

Return the inequality feasibility for the current step length.

Definition at line 341 of file solver-base.cpp.

| double get_hfeas_try | ( | ) | const |

Return the equality feasibility for the current step length.

Definition at line 343 of file solver-base.cpp.

| double get_preg | ( | ) | const |

Return the primal-variable regularization.

Definition at line 345 of file solver-base.cpp.

| double get_dreg | ( | ) | const |

Return the dual-variable regularization.

Definition at line 347 of file solver-base.cpp.

| double get_ureg | ( | ) | const |

Definition at line 355 of file solver-base.cpp.

| double get_steplength | ( | ) | const |

Return the step length \(\alpha\).

Definition at line 357 of file solver-base.cpp.

| double get_th_acceptstep | ( | ) | const |

Return the threshold used for accepting a step.

Definition at line 359 of file solver-base.cpp.

| double get_th_stop | ( | ) | const |

Return the tolerance for stopping the algorithm.

Definition at line 361 of file solver-base.cpp.

| double get_th_gaptol | ( | ) | const |

Return the threshold for accepting a gap as non-zero.

Definition at line 363 of file solver-base.cpp.

| FeasibilityNorm get_feasnorm | ( | ) | const |

Return the type of norm used to evaluate the dynamic and constraints feasibility.

Definition at line 365 of file solver-base.cpp.

| std::size_t get_iter | ( | ) | const |

Return the number of iterations performed by the solver.

Definition at line 367 of file solver-base.cpp.

| void set_xs | ( | const std::vector< Eigen::VectorXd > & | xs | ) |

Modify the state trajectory \(\mathbf{x}_s\).

Definition at line 369 of file solver-base.cpp.

| void set_us | ( | const std::vector< Eigen::VectorXd > & | us | ) |

Modify the control trajectory \(\mathbf{u}_s\).

Definition at line 395 of file solver-base.cpp.

| void set_preg | ( | const double | preg | ) |

Modify the primal-variable regularization value.

Definition at line 417 of file solver-base.cpp.

| void set_dreg | ( | const double | dreg | ) |

Modify the dual-variable regularization value.

Definition at line 424 of file solver-base.cpp.

| void set_ureg | ( | const double | ureg | ) |

Definition at line 443 of file solver-base.cpp.

| void set_th_acceptstep | ( | const double | th_acceptstep | ) |

Modify the threshold used for accepting a step.

Definition at line 451 of file solver-base.cpp.

| void set_th_stop | ( | const double | th_stop | ) |

Modify the tolerance for stopping the algorithm.

Definition at line 459 of file solver-base.cpp.

| void set_th_gaptol | ( | const double | th_gaptol | ) |

Modify the threshold for accepting a gap as non-zero.

Definition at line 466 of file solver-base.cpp.

| void set_feasnorm | ( | const FeasibilityNorm | feas_norm | ) |

Modify the current norm used for computing the dynamic and constraint feasibility.

Definition at line 473 of file solver-base.cpp.

|

protected |

Optimal control problem.

Definition at line 432 of file solver-base.hpp.

|

protected |

State trajectory.

Definition at line 433 of file solver-base.hpp.

|

protected |

Control trajectory.

Definition at line 434 of file solver-base.hpp.

|

protected |

Gaps/defects between shooting nodes.

Definition at line 435 of file solver-base.hpp.

|

protected |

Callback functions.

Definition at line 437 of file solver-base.hpp.

|

protected |

Flag that indicates whether the iteration is feasible.

Definition at line 438 of file solver-base.hpp.

|

protected |

Flag that indicates whether the previous iterate was feasible.

Definition at line 439 of file solver-base.hpp.

|

protected |

Cost for the current guess.

Definition at line 441 of file solver-base.hpp.

|

protected |

Merit for the current guess.

Definition at line 442 of file solver-base.hpp.

|

protected |

Value computed by stoppingCriteria()

Definition at line 443 of file solver-base.hpp.

|

protected |

LQ approximation of the expected improvement.

Definition at line 444 of file solver-base.hpp.

|

protected |

Reduction in the cost function computed by tryStep()

Definition at line 445 of file solver-base.hpp.

|

protected |

Reduction in the merit function computed by tryStep()

Definition at line 446 of file solver-base.hpp.

|

protected |

Expected reduction in the cost function.

Definition at line 447 of file solver-base.hpp.

|

protected |

Expected reduction in the merit function.

Definition at line 448 of file solver-base.hpp.

|

protected |

Reduction in the feasibility.

Definition at line 449 of file solver-base.hpp.

|

protected |

Total feasibility for the current guess.

Definition at line 450 of file solver-base.hpp.

|

protected |

Feasibility of the dynamic constraints for the current guess.

Definition at line 452 of file solver-base.hpp.

|

protected |

Feasibility of the inequality constraints for the current guess

Definition at line 453 of file solver-base.hpp.

|

protected |

Feasibility of the equality constraints for the current guess

Definition at line 455 of file solver-base.hpp.

|

protected |

Feasibility of the dynamic constraints evaluated for the current step length

Definition at line 457 of file solver-base.hpp.

|

protected |

Feasibility of the inequality constraints evaluated for the current step length

Definition at line 459 of file solver-base.hpp.

|

protected |

Feasibility of the equality constraints evaluated for the current step length

Definition at line 461 of file solver-base.hpp.

|

protected |

Current primal-variable regularization value.

Definition at line 463 of file solver-base.hpp.

|

protected |

Current dual-variable regularization value.

Definition at line 464 of file solver-base.hpp.

|

protected |

Definition at line 468 of file solver-base.hpp.

|

protected |

Current applied step length.

Definition at line 469 of file solver-base.hpp.

|

protected |

Threshold used for accepting a step.

Definition at line 470 of file solver-base.hpp.

|

protected |

Tolerance for stopping the algorithm.

Definition at line 471 of file solver-base.hpp.

|

protected |

Threshold limit to check non-zero gaps.

Definition at line 472 of file solver-base.hpp.

|

protected |

Type of norm used to evaluate the dynamics and constraints feasibility

Definition at line 473 of file solver-base.hpp.

|

protected |

Number of iterations performed by the solver.

Definition at line 475 of file solver-base.hpp.

|

protected |

Temporary variable used for computing the feasibility.

Definition at line 476 of file solver-base.hpp.

|

protected |

Adjusted inequality bound.

Definition at line 477 of file solver-base.hpp.