|

| EIGEN_MAKE_ALIGNED_OPERATOR_NEW | SolverFDDP (std::shared_ptr< ShootingProblem > problem) |

| | Initialize the FDDP solver.

|

| |

| virtual const Eigen::Vector2d & | expectedImprovement () |

| | Return the expected improvement \(dV_{exp}\) from a given current search direction \((\delta\mathbf{x}^k,\delta\mathbf{u}^k)\).

|

| |

| virtual void | forwardPass (const double stepLength) |

| |

| double | get_th_acceptnegstep () const |

| | Return the threshold used for accepting step along ascent direction.

|

| |

| void | set_th_acceptnegstep (const double th_acceptnegstep) |

| | Modify the threshold used for accepting step along ascent direction.

|

| |

| virtual bool | solve (const std::vector< Eigen::VectorXd > &init_xs=DEFAULT_VECTOR, const std::vector< Eigen::VectorXd > &init_us=DEFAULT_VECTOR, const std::size_t maxiter=100, const bool is_feasible=false, const double init_reg=NAN) |

| |

| void | updateExpectedImprovement () |

| | Update internal values for computing the expected improvement.

|

| |

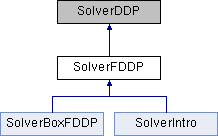

Feasibility-driven Differential Dynamic Programming (FDDP) solver.

The FDDP solver computes an optimal trajectory and control commands by iterates running backwardPass() and forwardPass(). The backward pass accepts infeasible guess as described in the SolverDDP::backwardPass(). Additionally, the forward pass handles infeasibility simulations that resembles the numerical behaviour of a multiple-shooting formulation, i.e.:

\begin{eqnarray}

\mathbf{\hat{x}}_0 &=& \mathbf{\tilde{x}}_0 - (1 -

\alpha)\mathbf{\bar{f}}_0,\\

\mathbf{\hat{u}}_k &=& \mathbf{u}_k + \alpha\mathbf{k}_k +

\mathbf{K}_k(\mathbf{\hat{x}}_k-\mathbf{x}_k),\\ \mathbf{\hat{x}}_{k+1} &=&

\mathbf{f}_k(\mathbf{\hat{x}}_k,\mathbf{\hat{u}}_k) - (1 -

\alpha)\mathbf{\bar{f}}_{k+1}.

\end{eqnarray}

Note that the forward pass keeps the gaps \(\mathbf{\bar{f}}_s\) open according to the step length \(\alpha\) that has been accepted. This solver has shown empirically greater globalization strategy. Additionally, the expected improvement computation considers the gaps in the dynamics:

\begin{equation}

\Delta J(\alpha) = \Delta_1\alpha + \frac{1}{2}\Delta_2\alpha^2,

\end{equation}

with

\begin{eqnarray}

\Delta_1 = \sum_{k=0}^{N-1} \mathbf{k}_k^\top\mathbf{Q}_{\mathbf{u}_k}

+\mathbf{\bar{f}}_k^\top(V_{\mathbf{x}_k} -

V_{\mathbf{xx}_k}\mathbf{x}_k),\nonumber\\ \Delta_2 = \sum_{k=0}^{N-1}

\mathbf{k}_k^\top\mathbf{Q}_{\mathbf{uu}_k}\mathbf{k}_k +

\mathbf{\bar{f}}_k^\top(2 V_{\mathbf{xx}_k}\mathbf{x}_k

- V_{\mathbf{xx}_k}\mathbf{\bar{f}}_k). \end{eqnarray}

For more details about the feasibility-driven differential dynamic programming algorithm see:

@inproceedings{mastalli-icra20,

author = {Mastalli, C. and Budhiraja, R. and Merkt, W. and Saurel, G. and Hammoud, B. and Naveau, M. and Carpentier, J. and Righetti, L. and Vijayakumar, S. and Mansard, N.},

title = {{Crocoddyl: An Efficient and Versatile Framework for Multi-Contact Optimal Control}},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

year = {2020}

}

- See also

SolverDDP(), backwardPass(), forwardPass(), expectedImprovement() and updateExpectedImprovement()

Definition at line 58 of file fddp.hpp.